Summary

The AI for Content Creation (AI4CC) workshop at CVPR brings together researchers in computer vision, machine learning, and AI. Content creation is required for simulation and training data generation, media like photography and videography, virtual reality and gaming, art and design, and documents and advertising (to name just a few application domains).

Recent progress in machine learning, deep learning, and AI techniques has allowed us to turn hours of manual, painstaking content creation work into minutes or seconds of automated or interactive work.

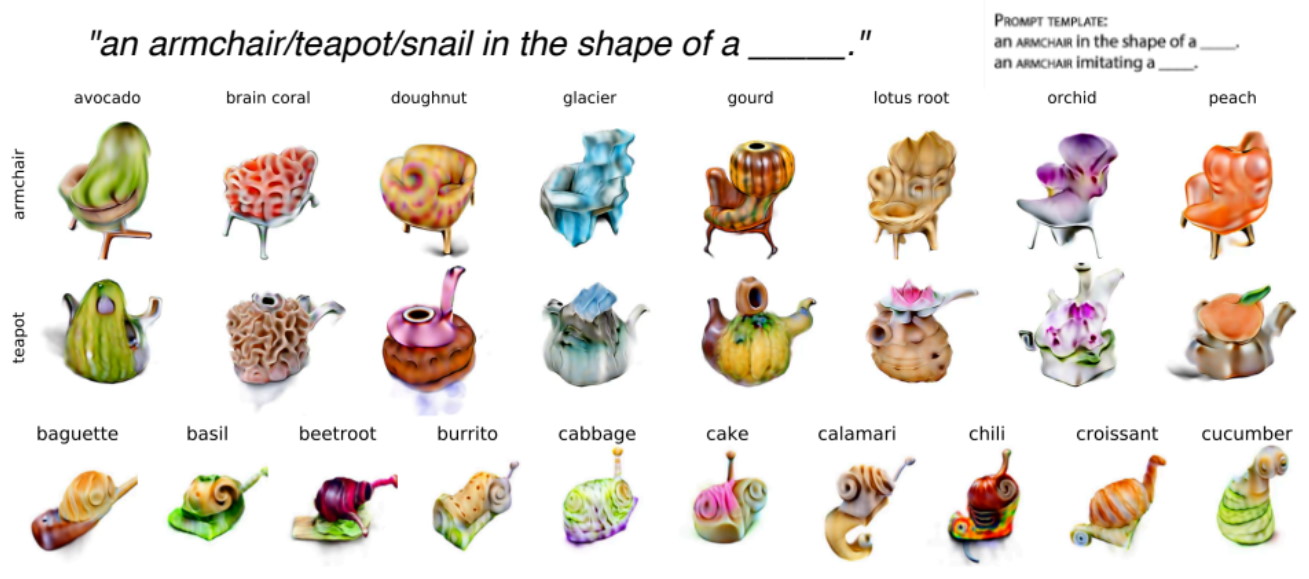

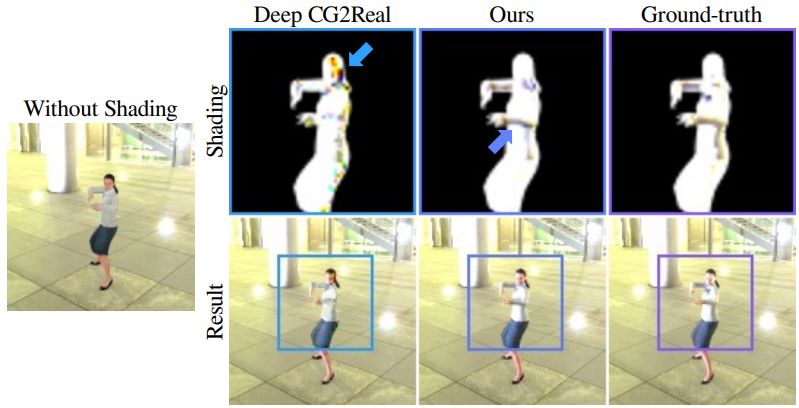

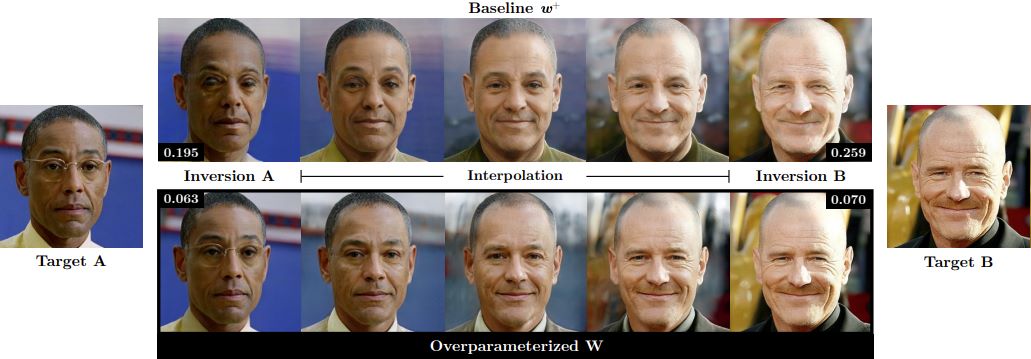

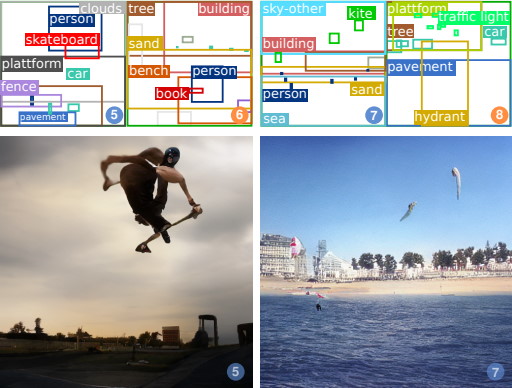

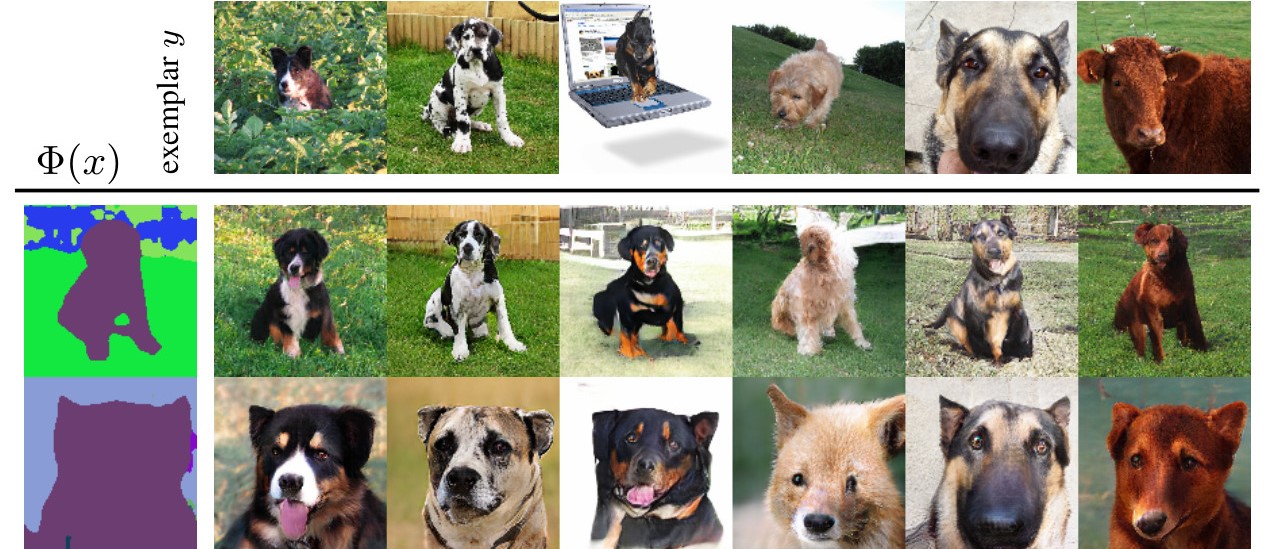

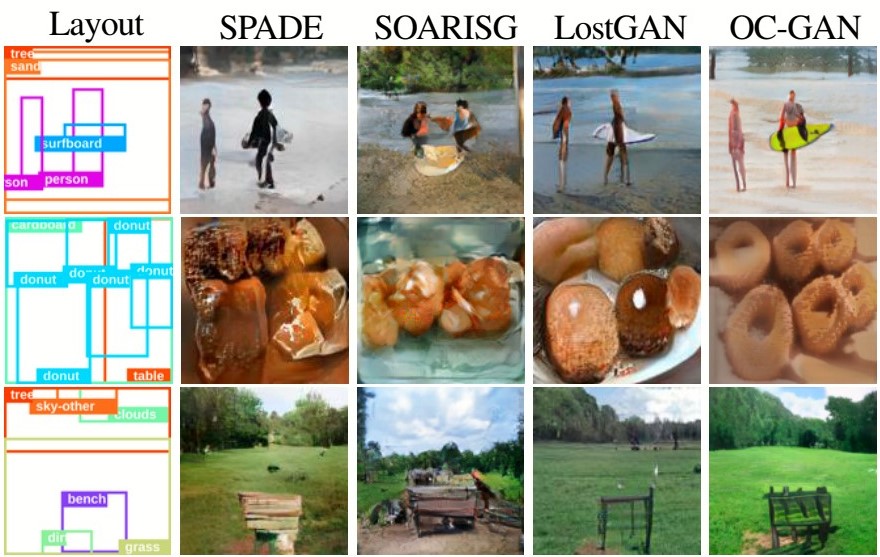

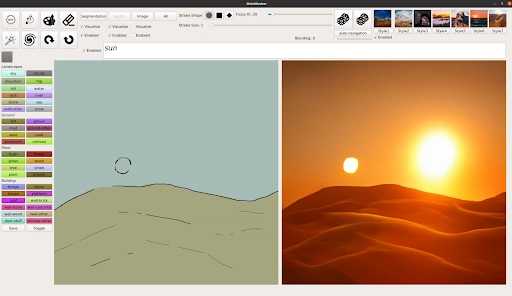

For instance, generative adversarial networks (GANs) can produce photorealistic images of 2D and 3D items such as humans, landscapes, interior scenes, virtual environments, or even industrial designs.

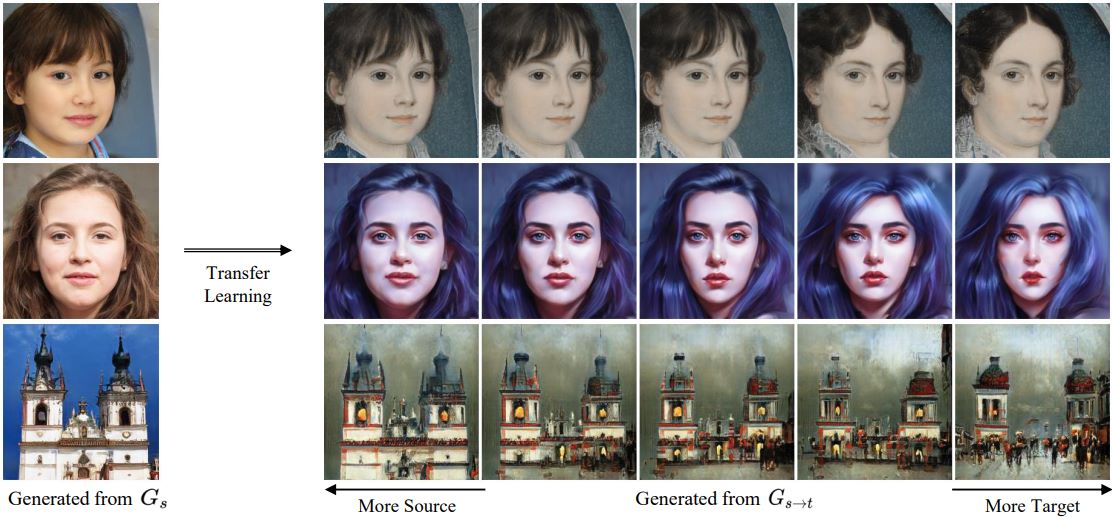

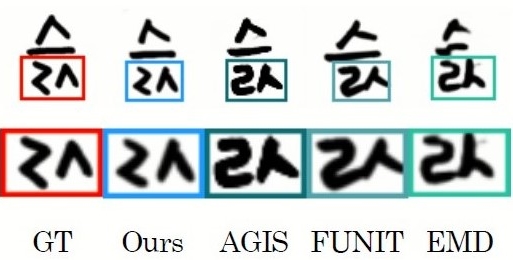

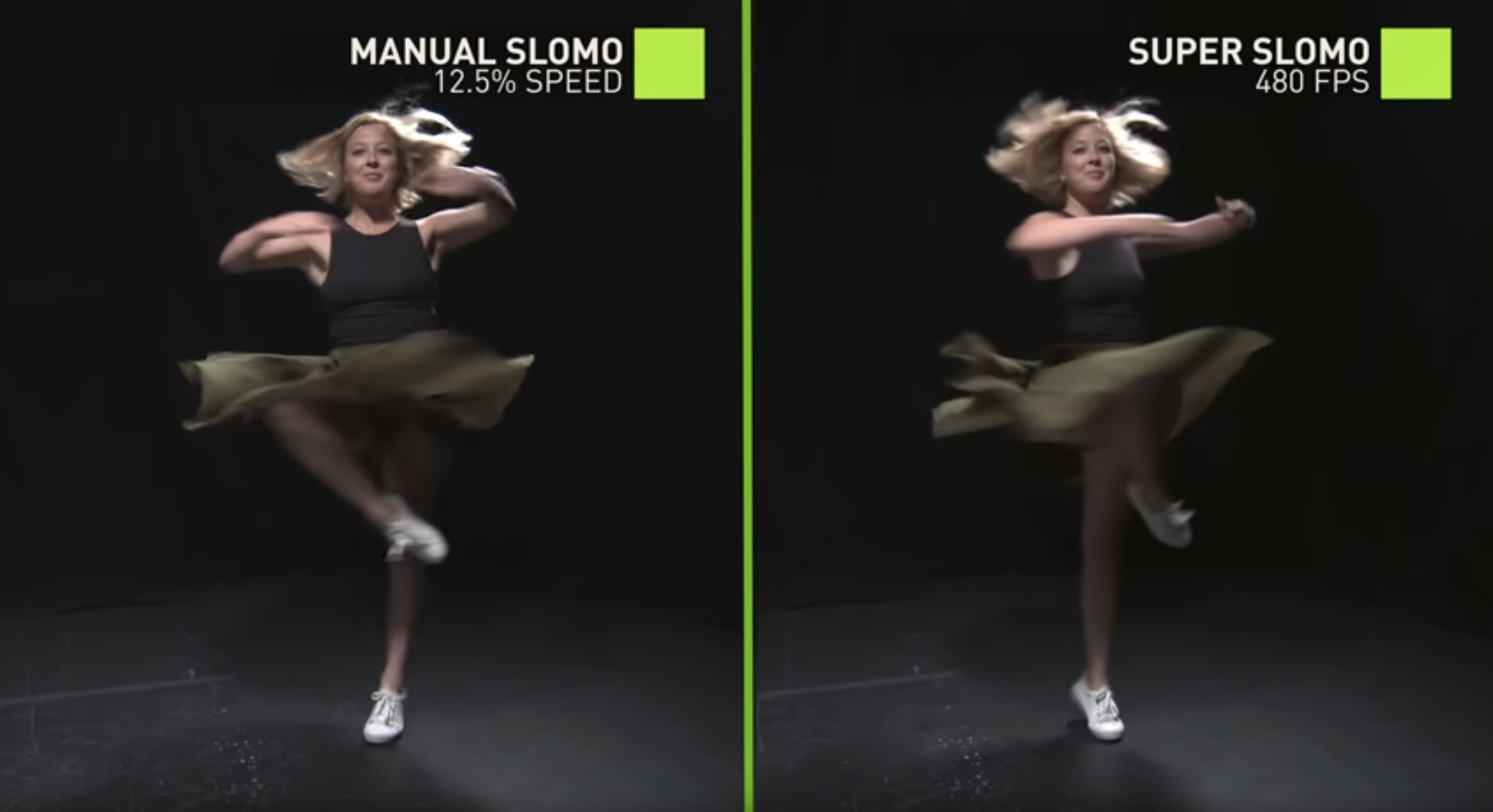

Neural networks can super-resolve and super-slomo videos, interpolate between photos with intermediate novel views and even extrapolate, and transfer styles to convincingly render and reinterpret content.

In addition to creating awe-inspiring artistic images, these offer unique opportunities for generating additional and more diverse training data.

Learned priors can also be combined with explicit appearance and geometric constraints, perceptual understanding, or even functional and semantic constraints of objects.

AI for content creation lies at the intersection of the graphics, the computer vision, and the design community. However, researchers and professionals in these fields may not be aware of its full potential and inner workings. As such, the workshop is comprised of two parts: techniques for content creation and applications for content creation. The workshop has three goals:

- To cover introductory concepts to help interested researchers from other fields start in this exciting area.

- To present success stories to show how deep learning can be used for content creation.

- To discuss pain points that designers face using content creation tools.

More broadly, we hope that the workshop will serve as a forum to discuss the latest topics in content creation and the challenges that vision and learning researchers can help solve.

Welcome! -

Deqing Sun (Google)

Lingjie Liu (University of Pennsylvania)

Fitsum Reda (NVIDIA)

Huiwen Chang (Google)

Lu Jiang (Google)

Seungjun Nah (NVIDIA)

Yijun Li (Adobe)

Ting-Chun Wang (NVIDIA)

Jun-Yan Zhu (Carnegie Mellon University)

James Tompkin (Brown University)